Naming therapy in patients with post-stroke aphasia

Authors: Livia Popa, Oana Vanța

Keywords: post-stroke rehabilitation, anomia, naming therapy, multisensory cues, virtual reality

Introduction

After a stroke, one of the most common forms of speech impairment affecting recovering patients is deficient word-finding, or “anomia”. Being unable to name otherwise known things is limiting and can be frustrating, as it undermines the communication of even basic, everyday notions. As such, clinicians and scientists have worked extensively to understand its neurological underpinnings and to design effective therapies. The specialized literature is extensive, making it difficult to decide upon the best approach. One substantial review looked at the results of 44 studies on semantic and phonological therapies, confirming once again the effectiveness of naming therapy. Even so, there is no one perfect therapy for all patients; rather, the approach should consider the specificities of the selected population [1].


Cueing therapies – background

“Cueing” refers to offering someone a clue, hint, sign, suggestion, indication, or direction. When communicating, humans cue each other frequently, both verbally and non-verbally, most often to enhance their messages or help their interlocutors articulate theirs. One may also be familiar with some of the many children’s games of guessing based on cues, but cueing can also be used therapeutically, such as in the clinical context of post-stroke rehabilitation.

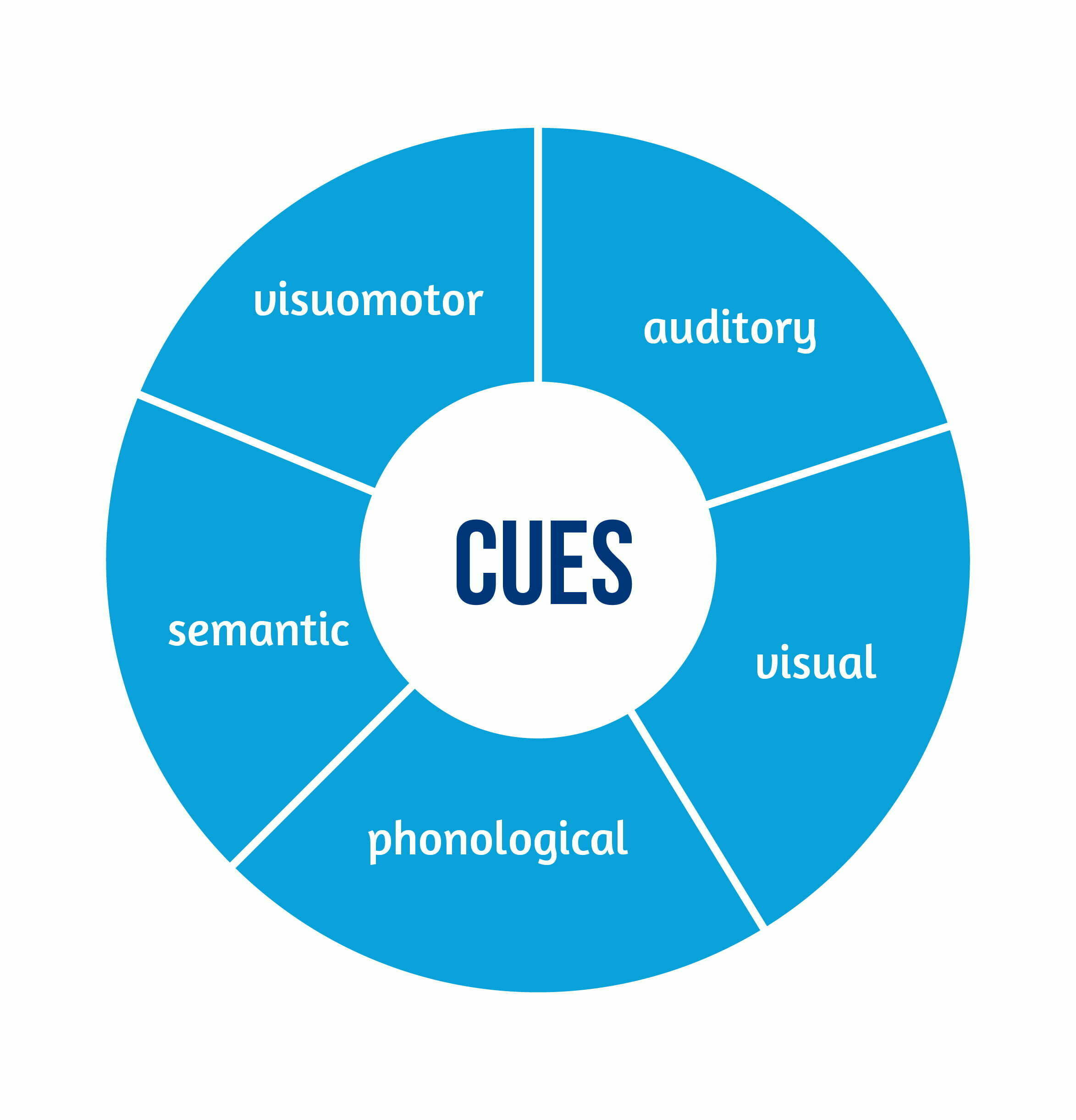

Cues can hint at how words are pronounced; for example, when prompting someone to say the word “phone”, it may help to tell them that the word starts with the sound [f]. Such cues are phonological. Other cues are semantic, in which case the hint is about the meaning of the word: for “phone”, a semantic cue could be “you use it to talk to people who are in a different location”. Other ways to suggest “phone” include having the patient hear a phone ringing (an auditory cue), see a picture of someone holding their hand to the ear (a visual cue), or watch someone act it out (a visuomotor cue), among others.

Digitalizing cueing therapies

As digital technologies have permeated health care and daily life, one team of Canadian scientists sought to review available research into technology-delivered treatments for anomia. Using a thorough, systematic approach, they identified and analyzed 23 relevant studies, all of which showed that certain technologies (e.g. computers, tablets) could be successfully used to better manage anomia as a post-stroke complication. Some therapeutic approaches required the trained participation of a clinician; others could be administered at home by the patients themselves. While the conclusion was clear, as the patients got better at naming the items they had practiced with, the degree to which those gains generalized and transferred to everyday, real-life situations was less pronounced [2].

Since this review, virtual reality (VR) applications for neurorehabilitation have been designed and tested. From the perspective of human experience, VR is mostly about perceiving presence. For a computer-generated virtual reality to work, there must be vivid-enough sensorial stimulation and adequate fulfilment of perceptual predictions building on prior knowledge and experience with the material world. Importantly, enabling interactivity with(in) that environment is a defining feature of any successful VR system [3]. As a platform for neurorehabilitation therapies, VR promises to provide endless possibilities for engaging participants with digital objects and each other in sensorily compelling ways without the constraints of the material world.

Researching the benefits of cueing for patients with post-stroke aphasia

Of the relatively many studies into the benefits of computer-based naming therapies, the focus here is on an experimental program conducted in 2016-2018 and described in 2020 in an international journal specializing in neuroscience and rehabilitation [4]. A team of Spanish and German researchers worked closely with ten consenting patients who had suffered a stroke 6 months to 12 years prior. The patients were five men and five women, 39 to 75 years old, all right-handed, three with moderate and seven with severe non-fluent aphasia related to the stroke event [5].

To ensure the validity of the results, these were patients whose anomia could not be explained by other disorders and who did not suffer from any other cognitive or motor impairments. Also, they had not recently benefited from other intensive therapies and were not engaged in computer-based language practice at the time of their participation in the study.

The experimental therapy program consisted of five weekly sessions of approximately 30-40 minutes each over two months, amounting to ~23 hours per patient of intensive, interactive practice with 120 words prompted using realistic 3D digital representations and two types of multisensory cues. The cueing strategies were integrated into a virtual reality game setup called the Rehabilitation Gaming System [5-7].
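The reported dose can be checked with a quick back-of-the-envelope calculation. The sketch below assumes that "two months" means eight weeks and uses 35 minutes as the midpoint session length; both values are assumptions made for illustration, not figures taken from the study.

```python
# Rough check of the therapy dose described above.
# Assumptions (not from the study): two months = 8 weeks,
# and 35 minutes is used as the midpoint of the 30-40 minute range.
WEEKS = 8
SESSIONS_PER_WEEK = 5


def total_hours(minutes_per_session):
    """Return total practice time in hours for the whole program."""
    sessions = WEEKS * SESSIONS_PER_WEEK  # 40 sessions under these assumptions
    return sessions * minutes_per_session / 60


low, mid, high = (total_hours(m) for m in (30, 35, 40))
print(f"{low:.1f} to {high:.1f} hours, about {mid:.1f} at the midpoint")
```

Under these assumptions, the midpoint lands close to the ~23 hours per patient quoted above.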

This neurorehabilitation approach uses digital technologies to enhance the well-established principles of Intensive Language Action Therapy, which can be summed up as motor action practice requiring intense concentration to reactivate language that has already been learned, but not recently used [8]. In a nutshell, two patients using two computers, VR headsets, and motion tracking sensors would play a game of verbal requests, selecting and handing to each other’s digital avatar virtual items depicting objects from the real world.

For half of the items, a therapist’s face was filmed while speaking each word, and the resulting clips were muted so that the cues would contain visual and motor information about pronunciation. These were the so-called Silent Visuomotor Cues (SVCs). For the other half, semantically relevant sound clips were recorded to make up Semantic Auditory Cues (SACs); see Table 1 below [5].

| A. Silent Visuomotor Cues (SVCs) | B. Semantic Auditory Cues (SACs) |
| --- | --- |
| bed, doughnut, house, milk, peach, table, towel | airplane, ball, bee, fan, telephone, typewriter, vacuum cleaner |

Table 1. Examples of vocabulary items included in the experimental therapy program: A. Items associated with SVCs. B. Items associated with SACs, available from [7].
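To make the split between the two cue types more concrete, the sketch below models the example items from Table 1 as a small lookup structure. The class names, the `cue_for` helper, and the layout are hypothetical and chosen only for illustration; they do not describe how the Rehabilitation Gaming System actually stores its vocabulary.

```python
from dataclasses import dataclass
from enum import Enum


class CueType(Enum):
    SILENT_VISUOMOTOR = "SVC"  # muted clip of a therapist saying the word
    SEMANTIC_AUDITORY = "SAC"  # sound clip semantically related to the item


@dataclass(frozen=True)
class VocabularyItem:
    word: str
    cue_type: CueType


# Example items from Table 1; the data structure itself is hypothetical.
SVC_WORDS = ["bed", "doughnut", "house", "milk", "peach", "table", "towel"]
SAC_WORDS = ["airplane", "ball", "bee", "fan", "telephone", "typewriter",
             "vacuum cleaner"]

library = (
    [VocabularyItem(w, CueType.SILENT_VISUOMOTOR) for w in SVC_WORDS]
    + [VocabularyItem(w, CueType.SEMANTIC_AUDITORY) for w in SAC_WORDS]
)


def cue_for(word):
    """Return the cue type paired with a word, or None if it is not listed."""
    for item in library:
        if item.word == word:
            return item.cue_type
    return None


print(cue_for("peach").value)       # SVC
print(cue_for("typewriter").value)  # SAC
```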

To better understand them, here are some examples, starting with an SVC. When one says “peach” in English, we see both lips touching at first, then quickly separating to produce the plosive sound [p], after which they are pulled sideways into a smile-like expression for the long vowel [i:], while the final sound [tʃ] is less visually obvious, as it is produced deeper inside the speaker’s mouth without involving the lips. Depending on the phonological similarities between words in a specific language, the difficulty of guessing based on such cues varies. In this case, the patients’ language was Spanish, so “casa” and “cama” would be harder to distinguish visually than when said in English (“house” and “bed”, respectively) [7].

Similarly, the difficulty of SACs can be varied depending on how commonplace the respective items are. For instance, in today’s world, it can be easier to think of an “airplane” when hearing the loud noise of a take-off than to recognize the sounds made by a “typewriter”, especially if the listener has never seen or used a “typewriter” before. Likewise, cars are ubiquitous, but musical instruments can be specific to certain parts of the world so that someone may be more familiar with the sound of a “banjo” than that of an “accordion” [7].

Results – significant progress overall, with cueing strategies most helpful in the beginning

Each game session was carefully monitored and recorded to produce detailed data for analysis, such as naming accuracy and successful interaction times. Notably, the patients were also tested on their ability to utter the trained vocabulary at baseline, every two weeks during the experimental two-month therapy program, and additionally after two more months, counting a total of six times over 16 weeks [5].
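The testing schedule can be laid out explicitly. This is a minimal sketch that assumes the two-month program and the two-month follow-up interval each correspond to eight weeks; the constant names are illustrative only.

```python
# Assessment weeks implied by the schedule described above.
# Assumption: "two months" is treated as 8 weeks in both places.
PROGRAM_WEEKS = 8
INTERVAL_WEEKS = 2
FOLLOW_UP_DELAY_WEEKS = 8  # "two more months" after the program ends


def assessment_weeks():
    """Return the week numbers at which naming was tested."""
    # Baseline (week 0) plus one test every two weeks until the program ends.
    during_program = list(range(0, PROGRAM_WEEKS + 1, INTERVAL_WEEKS))
    follow_up = PROGRAM_WEEKS + FOLLOW_UP_DELAY_WEEKS
    return during_program + [follow_up]


weeks = assessment_weeks()
print(weeks)       # [0, 2, 4, 6, 8, 16]
print(len(weeks))  # 6
```

Under these assumptions, the count matches the six assessments over 16 weeks mentioned above.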

The key point of interest was the accuracy with which the patients could name the items throughout the studied period. In this regard, the results showed significant progress as early as two weeks into the therapy, and the patients continued to improve significantly throughout the intensive practice phase. These benefits were still statistically significant at the 16-week reevaluation [5].

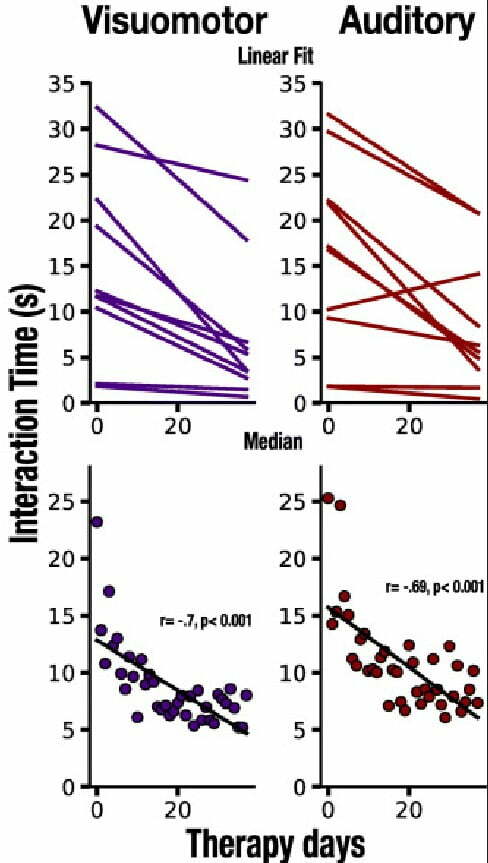

Also, as the patients advanced through the sessions and got increasingly better at naming the items, they got significantly faster. As a result, their interaction times noticeably decreased, as seen in Figure 2 below [5].

Naming accuracy and interaction times were also analyzed to assess overall communicative effectiveness. The researchers found that the progress in both areas was significantly correlated, suggesting that interaction time, which is easy to track automatically, can reliably indicate whether patients with aphasia are getting better at verbalizing [5].
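As an illustration of what such a relationship looks like numerically, the sketch below computes a Pearson correlation coefficient between naming accuracy and interaction time. The numbers are made up for the example and are not data from the study, and Pearson's r is an illustrative choice; this digest does not specify which statistic the researchers used.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical values for one patient across five assessments
# (invented for illustration, NOT taken from the study).
accuracy = [0.30, 0.45, 0.55, 0.70, 0.80]             # share of items named
interaction_seconds = [42.0, 35.0, 30.0, 24.0, 20.0]  # time per interaction

r = pearson_r(accuracy, interaction_seconds)
print(f"r = {r:.2f}")
```

With accuracy rising while interaction time falls, the coefficient comes out strongly negative, which is the pattern one would expect if interaction time tracks naming ability.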

When the results were compared relative to the cued item sets (SVCs and SACs) and when cues were not used to stimulate the naming of a target item, the researchers made an interesting observation. In the early stages of the therapy, successful interaction was noticeably faster when multisensory cues were in play, regardless of type. However, by the end of the program, the patients had made significant progress with all items, and cueing had become inconsequential. This implies that cues are most helpful to begin to reactivate past knowledge and abilities in the early stages. Later on, the target vocabulary will eventually have to be updated for the patients to continue to improve [5].

Conclusions

To conclude, multisensory cues and targeted VR games take naming therapy to the next level. An important takeaway from this experiment done by Klaudia Grechuta and her colleagues is that even patients with chronic non-fluent aphasia, whose stroke events happened a long time ago, can benefit substantially from intensive naming therapy that blends well-established principles with technological innovations. The less a patient has had the chance or ability to name things using learned vocabulary, the more helpful multisensory cues will be to reactivate the patient’s related knowledge and speech function [5].

Non-specific, casual video gaming with family and friends is not a substitute for attending a specialized program. In 2019, researchers pooled the results of 30 randomized controlled studies investigating experimental VR-based rehabilitation programs for stroke survivors seeking to regain the kind of motor skills required for daily activities. Although this review did not focus on anomia specifically, the aggregate data indicated that the most beneficial VR solutions for post-stroke patients are those designed specifically with neurorehabilitation in mind, rather than off-the-shelf, recreational VR setups, and that they can outperform even conventional, non-VR therapies [9].

Provided patients have the necessary basic equipment and a good-enough Internet connection, effective treatment programs can be delivered online with relatively minimal support. This would make targeted therapy scalable, affordable, and immediately accessible to patients unable to attend in person for whatever reasons. Moreover, clinical therapists could collaborate with language experts, mobile app developers, and VR content designers to create and share specialized libraries of 3D items and multisensory cues for different languages and with varying degrees of difficulty.

References

1. Wisenburn B, Mahoney K. A meta-analysis of word-finding therapies in aphasia. Aphasiology 2009; 23(11):1338-1552; doi: 10.1080/02687030902732745

2. Lavoie M, Macoir J, Bier N. Effectiveness of technologies in the treatment of post-stroke anomia: A systematic review. Journal of Communication Disorders 2017; 65:43-53; doi: 10.1016/j.jcomdis.2017.01.001

3. Steuer J. Defining Virtual Reality: Dimensions Determining Telepresence. Journal of Communication 1992; 42:73-93; doi: 10.1111/j.1460-2466.1992.tb00812

4. Verschure P. Virtual Reality Based Sensorimotor Speech Therapy; c2018; National Library of Medicine (US); Identifier NCT02928822; 2022 Jul 18; Available from https://clinicaltrials.gov/ct2/show/NCT02928822

5. Grechuta K, Rubio Ballester B, Espín Munné R, Usabiaga Bernal T, Molina Hervás B, Mohr B, Pulvermüller F, San Segundo RM, Verschure PFMJ. Multisensory cueing facilitates naming in aphasia. J Neuroeng Rehabil 2020; 17(1):122; doi: 10.1186/s12984-020-00751-w

6. Cameirão MS, Badia SBI, Oller ED, Verschure PFMJ. Neurorehabilitation using the virtual reality-based Rehabilitation Gaming System: methodology, design, psychometrics, usability and validation. J Neuroeng Rehabil 2010; 7:48; doi: 10.1186/1743-0003-7-48

7. Grechuta* K, Rubio Ballester B, Espín Munné R, Usabiaga Bernal T, et al. Augmented dyadic therapy boosts recovery of language function in patients with non-fluent aphasia. Stroke 2019; 50(5):1270-1274; doi: 10.1161/STROKEAHA.118.023729

* Whenever you see this article cited with * in the text of the Digest, the reference is to the article’s Supplemental Material, available online at https://www.ahajournals.org/doi/suppl/10.1161/STROKEAHA.118.023729

8. Difrancesco S, Pulvermüller F, Mohr B. Intensive language-action therapy (ILAT): The methods. Aphasiology 2012; 26(11):1317-1351; doi: 10.1080/02687038.2012.705815

9. Maier M, Rubio Ballester B, Duff A, Duarte Oller E, Verschure PFMJ. Effect of Specific Over Nonspecific VR-Based Rehabilitation on Poststroke Motor Recovery: A Systematic Meta-analysis. Neurorehabil Neural Repair 2019; 33(2):112-129; doi: 10.1177/1545968318820169